Data Science and Machine Learning Series: Recurrent Neural Networks


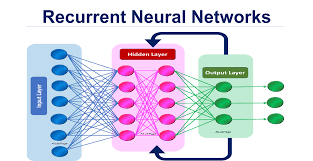

Recurrent neural networks (RNNs) are widely used deep learning models for sequence data, with applications such as time series forecasting, speech recognition, sentiment classification, machine translation, and Named Entity Recognition.

Applying feedforward neural networks to sequence data raises two major problems:

- Inputs and outputs can have different lengths from one example to another

- MLPs do not share features learned at one position of a sequence with the other positions
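To make both problems concrete, here is a minimal sketch of a vanilla (Elman-style) RNN forward pass in NumPy, assuming the common update `h_t = tanh(W_xh · x_t + W_hh · h_{t-1} + b_h)` with `h_0 = 0`. The names `rnn_forward`, `W_xh`, `W_hh` and `b_h`, and the sizes used, are illustrative choices and not necessarily the notation used later in this article. The same three parameter arrays are applied at every time step, so whatever is learned at one position is reused at all others, and the loop simply runs for as many steps as the input has, so sequences of different lengths are handled without changing the model.

```python
import numpy as np

def rnn_forward(xs, W_xh, W_hh, b_h):
    """Run a vanilla (Elman-style) RNN over one sequence.

    xs: array of shape (T, n_x), one input vector per time step; T may vary.
    Returns the stacked hidden states, shape (T, n_h).
    """
    n_h = W_hh.shape[0]
    h = np.zeros(n_h)  # initial hidden state h_0 = 0 (an assumption)
    hs = []
    for x_t in xs:  # the SAME weights are reused at every position t
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
        hs.append(h)
    return np.stack(hs)

rng = np.random.default_rng(0)
n_x, n_h = 3, 4  # hypothetical input and hidden sizes
W_xh = rng.normal(scale=0.1, size=(n_h, n_x))  # input-to-hidden weights
W_hh = rng.normal(scale=0.1, size=(n_h, n_h))  # hidden-to-hidden weights
b_h = np.zeros(n_h)                            # hidden bias

short_seq = rng.normal(size=(2, n_x))  # a sequence with 2 time steps
long_seq = rng.normal(size=(7, n_x))   # a sequence with 7 time steps

# One set of parameters handles both lengths.
h_short = rnn_forward(short_seq, W_xh, W_hh, b_h)
h_long = rnn_forward(long_seq, W_xh, W_hh, b_h)
print(h_short.shape, h_long.shape)  # (2, 4) (7, 4)
```

The parameter count here (`n_h*n_x + n_h*n_h + n_h`) does not depend on the sequence length. By contrast, an MLP that takes the whole sequence as one flat input would need an input layer of fixed size `T * n_x`, tied to a single length `T`.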

In this article, we will explore the mathematics behind the success of RNNs, as well as some special types of cells such as LSTMs and GRUs. Finally, we will dig into encoder-decoder architectures combined with attention mechanisms.

NB: Since Medium does not support LaTeX, the mathematical expressions are inserted as images. I therefore advise turning off dark mode for a better reading experience.

Table of contents

- Notation

- RNN model

- Different types of RNNs

- Advanced types of cells

- Encoder & Decoder architecture

- Attention mechanisms
